Scientific applications often face a broad range of real-world variations: data bias, noise, unknown transformations, adversarial corruptions, and other distribution shifts. Many of LLNL’s mission-critical applications are considered high regret, meaning that a faulty decision can endanger human safety or incur significant costs. As vulnerable ML systems become pervasively deployed, manipulation and misuse can have serious consequences.

Sustainable acceptance of ML requires moving beyond an exploratory phase to the development of assured ML systems that provide rigorous guarantees of robustness, fairness, and privacy. We use techniques from optimization, information theory, and statistical learning theory to achieve these properties, and we design tools that apply these techniques efficiently on large-scale computing systems.

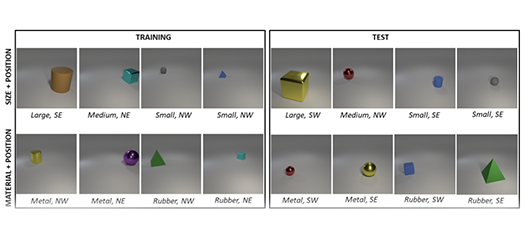

Attribute-Guided Adversarial Training

This research, accepted at AAAI 2021, enables deep neural networks (DNNs) to be robust against a wide range of naturally occurring perturbations.

Uncertainty-Matching GNNs

This paper, accepted at AAAI 2021, introduces the Uncertainty Matching Graph Neural Network, which aims to improve the robustness of graph neural network (GNN) models.

Feature Importance Estimation

In this paper, accepted at AAAI 2021, a research team describes PRoFILE, a novel method for estimating feature importance.

Advancing ML for Mission-Critical Applications

As our booth and poster at the 2020 LLNL Computing Virtual Expo explained, our work is a partnership among ML, HPC, and Lab programs.

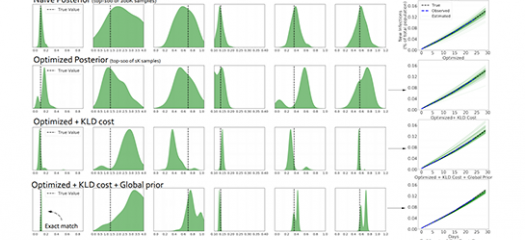

Calibration with NN Surrogates

This work calibrates an agent-based epidemiological model (EpiCast) using neural network (NN) surrogates and simulation ensembles for different U.S. metropolitan areas. Peer review pending.

Meet a Data Science Expert

Jay Thiagarajan's research involves many types of large-scale, structured data, each of which requires specially designed ML techniques.

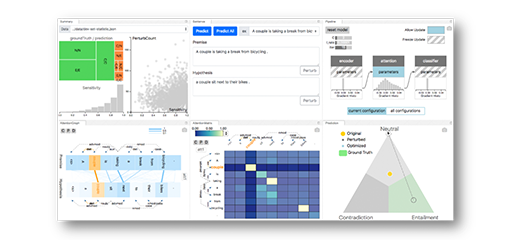

NLPVis Software Repository

NLPVis is designed to visualize the attention mechanisms of neural-network-based natural language models.